Omar Chehab

Bonjour! Welcome to my website. I am a postdoc at Carnegie Mellon University, in the Machine Learning Department, working with Pradeep Ravikumar.

I completed my graduate studies in France: this includes a PhD in Mathematical Computer Science at Inria with Aapo Hyvärinen and Alexandre Gramfort, and a postdoc in the Statistics Department of CREST-ENSAE with Anna Korba.

More details are listed in my CV and on this profile page. You can reach me via email, find my code on GitHub, browse my publications on Google Scholar, or connect on LinkedIn.

Research

My research is in machine learning, with the following goals:

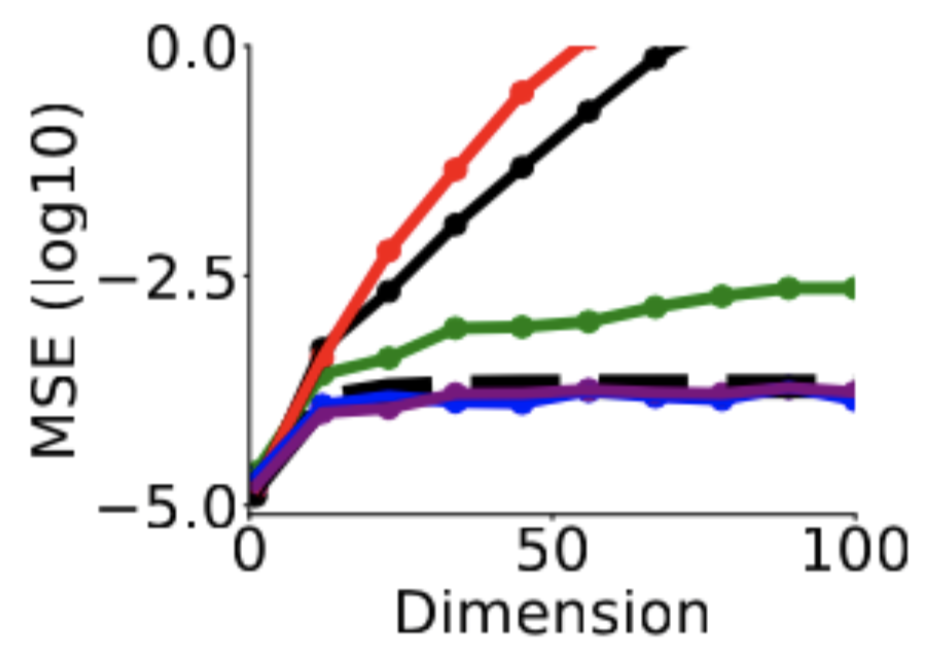

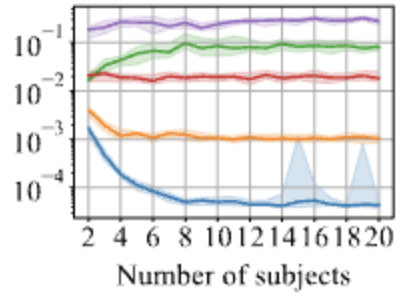

The algorithms I work with to achieve these goals range from diffusions and flows to multi-view independent component analysis. All of the above goals can be framed as learning a probability distribution. A central question guiding my work is: “How much compute and data are required to achieve a given level of accuracy?” For which distributions does this work, and for which does it fail? And how does the algorithmic design affect the answer? In other words, I aim to quantify the computational and sample efficiency of these algorithms. I study these questions across multiple data modalities: synthetic, image, and brain data. The synthetic data is designed to have certain statistical properties (e.g., multimodality); the image datasets are standard benchmarks in the academic community; and the non-invasive brain recordings were central to my PhD work and remain an active research direction.

Energy-Based Models

Diffusion and Flow Models

Sampling Algorithms

Score Matching

Density Ratio Estimation

Causal Discovery

Representation Learning

Brain Imaging

Multi-View Independent Component Analysis

Sampling from Energy-Based Statistical Models

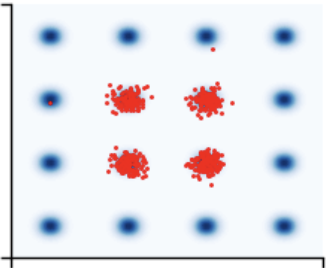

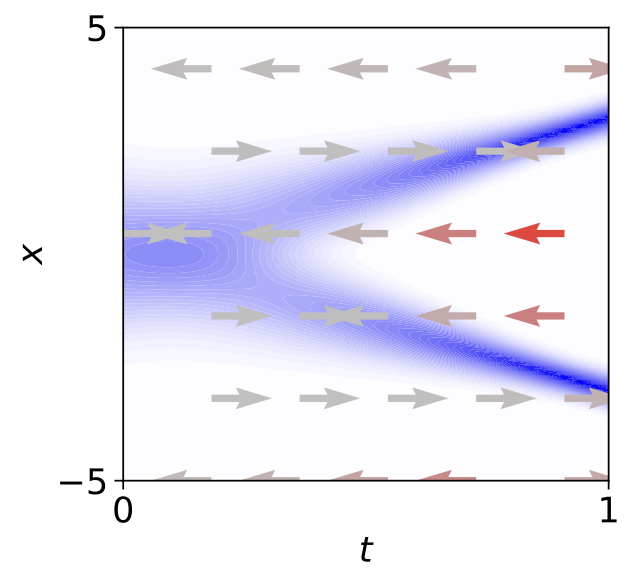

Time-reversed diffusions are state-of-the-art for sampling multi-modal distributions, but they rely on score estimates. We analyze how estimation errors affect the final samples.

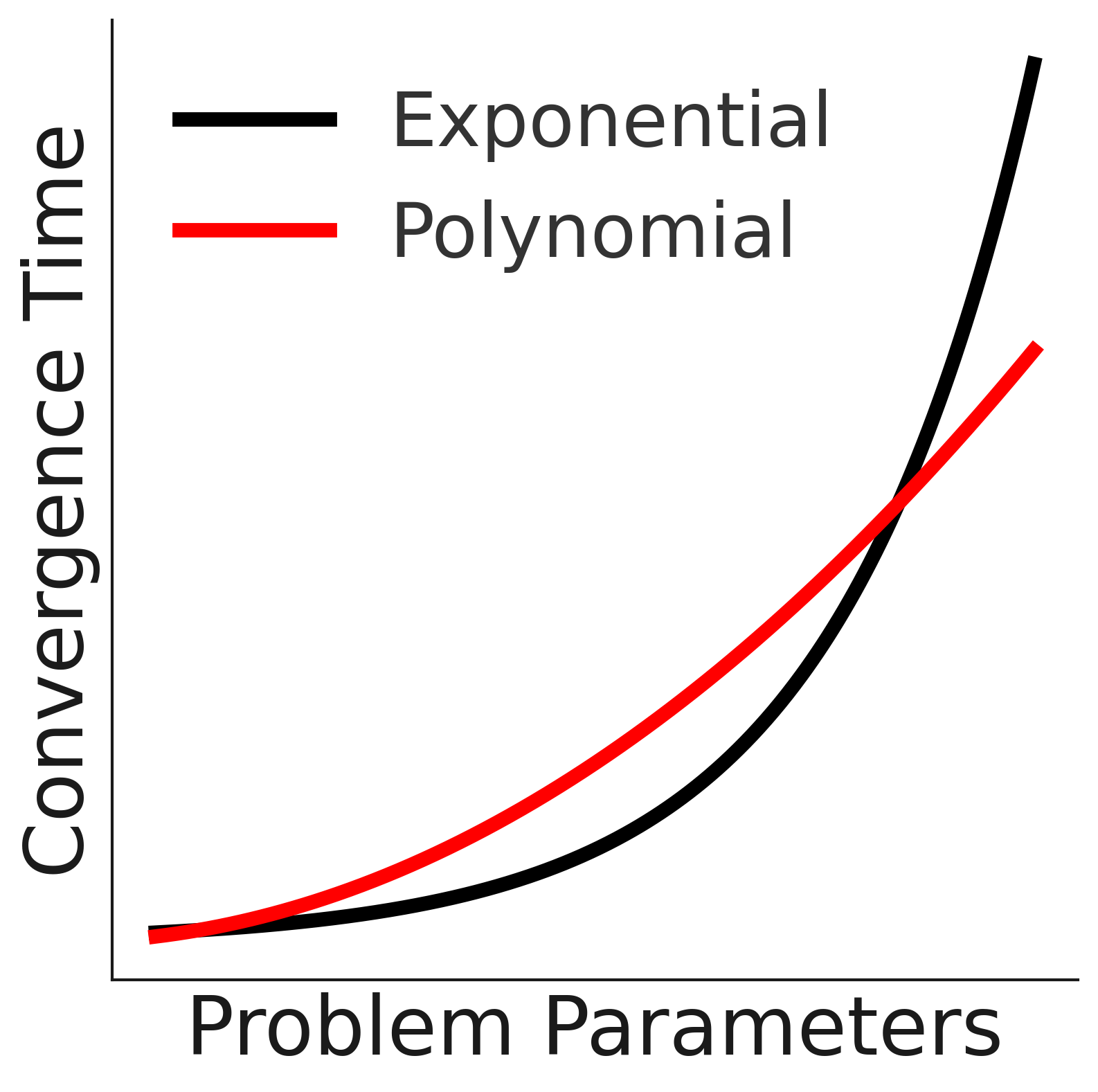

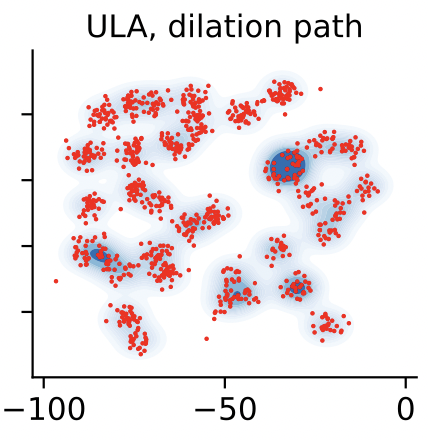

Annealed MCMC tries to approximate a prescribed path of distributions. We show that the popular geometric mean path with a Gaussian has unfavorable geometry. Presented at the Yale workshop on sampling.

Time-reversed diffusions are state-of-the-art in sampling but rely on score estimates. We aim to reduce their variance.

Estimating Energy-Based Statistical Models

We explore the design choices of a method called CNCE for learning energy-based models.

Annealed Importance Sampling uses a prescribed path of distributions to compute an estimate of a normalizing constant. We quantify how the choice of path impacts the estimation error.
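As a rough illustration of the idea, here is a minimal sketch of Annealed Importance Sampling on a toy problem. Everything specific in it is an assumption made for the example and is not taken from the paper: the one-dimensional Gaussian target, the standard normal starting distribution, the geometric path with a linear schedule, and the single random-walk Metropolis step per temperature. The function names (`log_p0`, `log_target_unnorm`, `log_path`, `ais_log_z`) are hypothetical.

```python
import numpy as np

def log_p0(x):
    # Normalized starting density N(0, 1), so its normalizing constant is 1.
    return -0.5 * x**2 - 0.5 * np.log(2 * np.pi)

def log_target_unnorm(x, mean=2.0, scale=0.5):
    # Unnormalized toy target; its true normalizer is scale * sqrt(2 * pi).
    return -0.5 * ((x - mean) / scale) ** 2

def log_path(x, beta):
    # Geometric path: interpolates the log-densities linearly in beta.
    return (1.0 - beta) * log_p0(x) + beta * log_target_unnorm(x)

def ais_log_z(n_particles=2000, n_steps=200, step_size=0.5, seed=0):
    rng = np.random.default_rng(seed)
    betas = np.linspace(0.0, 1.0, n_steps + 1)
    x = rng.standard_normal(n_particles)  # exact samples from p0
    log_w = np.zeros(n_particles)
    for b_prev, b in zip(betas[:-1], betas[1:]):
        # Accumulate the incremental importance weight along the path.
        log_w += log_path(x, b) - log_path(x, b_prev)
        # One random-walk Metropolis step that leaves the density at b invariant.
        prop = x + step_size * rng.standard_normal(n_particles)
        log_accept = log_path(prop, b) - log_path(x, b)
        accept = np.log(rng.uniform(size=n_particles)) < log_accept
        x = np.where(accept, prop, x)
    # Log of the average weight, computed stably.
    m = log_w.max()
    return m + np.log(np.mean(np.exp(log_w - m)))

if __name__ == "__main__":
    print("AIS estimate of log Z:", ais_log_z())
    print("True log Z:", np.log(0.5 * np.sqrt(2 * np.pi)))
```

Swapping `log_path` for a different interpolation is one simple way to experiment with how the choice of path changes the estimate.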

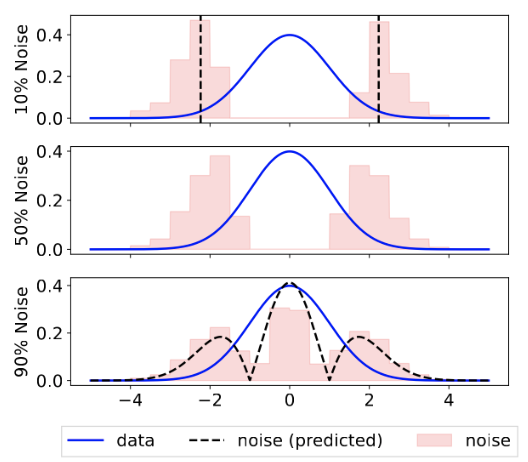

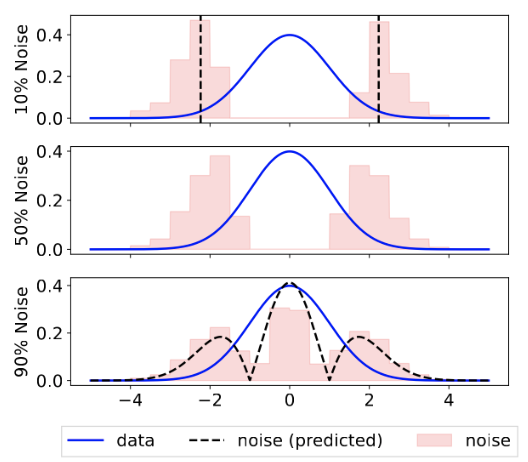

Noise-contrastive estimation (NCE) estimates the data density by minimizing a binary classification loss that discriminates data samples from noise samples. We find the noise distribution that minimizes the estimation error.
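The classification view of NCE can be sketched on a toy problem. This is an illustrative example under stated assumptions, not the setup of the paper: the model is an unnormalized one-dimensional Gaussian with unknown mean `mu` and unknown log-normalizer `c`, the noise is a fixed Gaussian with scale 2, data and noise samples are equally many, and the loss is minimized by plain gradient descent. The function names (`log_noise`, `log_model`, `nce_fit`) are hypothetical.

```python
import numpy as np

def log_noise(x, scale=2.0):
    # Normalized log-density of the (assumed) noise distribution N(0, scale^2).
    return -0.5 * (x / scale) ** 2 - np.log(scale) - 0.5 * np.log(2 * np.pi)

def log_model(x, mu, c):
    # Unnormalized Gaussian model; c plays the role of the log-normalizer.
    return -0.5 * (x - mu) ** 2 + c

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def nce_fit(x_data, x_noise, steps=2000, lr=0.1):
    mu, c = 0.0, 0.0
    for _ in range(steps):
        # Classifier logit: log-ratio between model and noise densities.
        g_data = log_model(x_data, mu, c) - log_noise(x_data)
        g_noise = log_model(x_noise, mu, c) - log_noise(x_noise)
        # Derivative of the logistic loss with respect to the logit.
        r_data = sigmoid(g_data) - 1.0  # data samples carry label 1
        r_noise = sigmoid(g_noise)      # noise samples carry label 0
        # Chain rule: d(logit)/d(mu) = x - mu and d(logit)/d(c) = 1.
        grad_mu = np.mean(r_data * (x_data - mu)) + np.mean(r_noise * (x_noise - mu))
        grad_c = np.mean(r_data) + np.mean(r_noise)
        mu -= lr * grad_mu
        c -= lr * grad_c
    return mu, c

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x_data = rng.normal(1.0, 1.0, size=10000)   # data drawn from N(1, 1)
    x_noise = rng.normal(0.0, 2.0, size=10000)  # noise drawn from N(0, 4)
    mu_hat, c_hat = nce_fit(x_data, x_noise)
    print("Estimated mean:", mu_hat)
    print("Estimated log-normalizer:", c_hat, "true:", -0.5 * np.log(2 * np.pi))
```

Changing the noise scale or mean in this sketch gives a quick feel for how the noise distribution affects the quality of the estimates.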

Learning Representations of Brain Activity

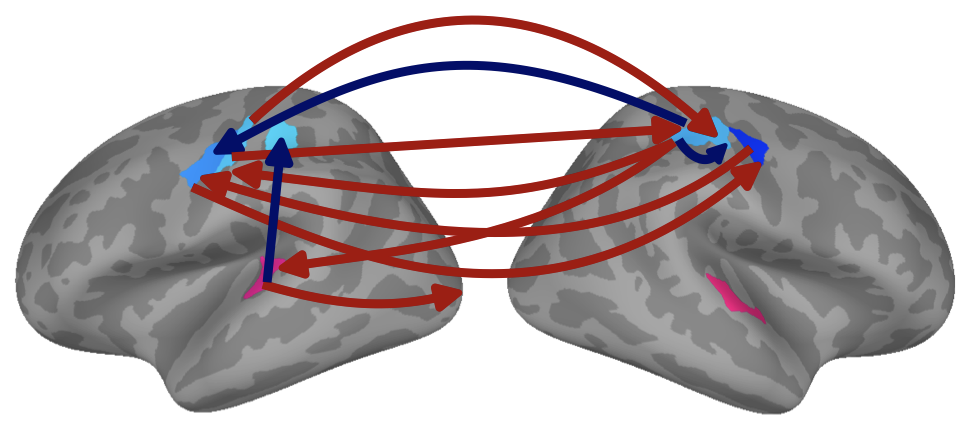

We learn causal relationships, represented as a directed acyclic graph, between random variables collected from different environments.

Independent Component Analysis (ICA) is a popular algorithm for learning a representation of data. We propose a version that handles data collected from different contexts whose representations differ only by temporal delays or dilations.

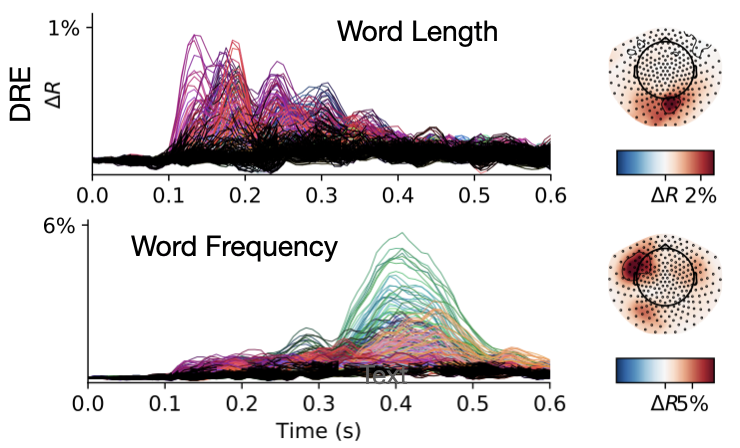

We compare different models for predicting the brain’s response to external stimuli. Our model, based on a deep neural network, is more accurate and interpretable.

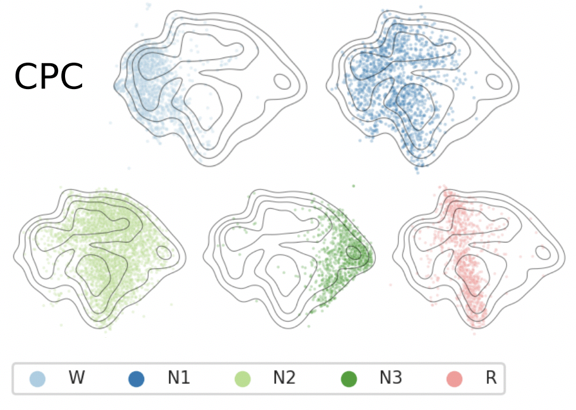

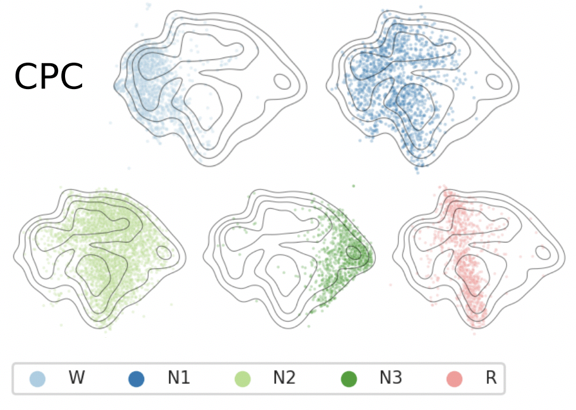

We learn rich representations of EEG brain activity using a self-supervised loss.

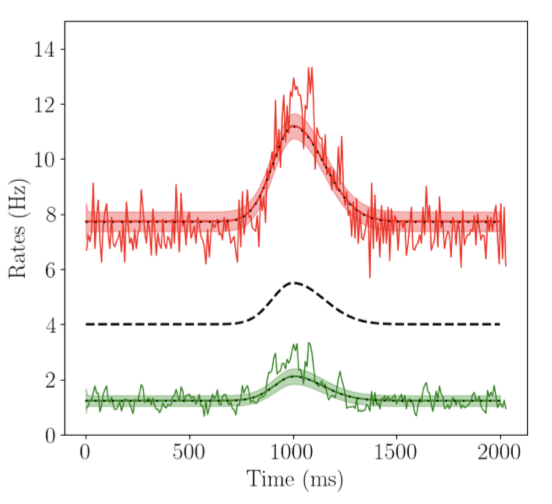

Our theory predicts the average behavior of neuronal populations that fire asynchronously.

Talks

Notes

Cheatsheet of computer commands

Teaching

I was a teaching assistant for the following Master’s courses.

Optimization for Data Science

2021-2023

Institut Polytechnique de Paris

Professors: Alexandre Gramfort, Pierre Ablin

Advanced Machine Learning

2020-2022

CentraleSupelec, Universite Paris-Saclay

Professors: Emilie Chouzenoux, Frederic Pascal

Optimization

2020-2021

CentraleSupelec, Universite Paris-Saclay

Professors: Jean-Christophe Pesquet, Sorin Olaru, Stephane Font

Service

I review submissions to the following machine learning conferences: NeurIPS, ICML, ICLR, and AISTATS. I also occasionally review submissions to the following statistics and machine learning journals: JMLR, TMLR, AISM, and Mathematical Methods of Statistics. I am grateful to have been recognized as a "top reviewer" (AISTATS 2022; NeurIPS 2022, 2023, and 2024).

Design and source code from Jon Barron's website