Omar Chehab

In June 2025, I joined the Machine Learning Department at Carnegie Mellon University as a Postdoctoral Research Associate in Pradeep Ravikumar's team. Previously, I was a postdoc in the Statistics Department of CREST-ENSAE, working in Anna Korba's team. In November 2023, I completed my PhD in mathematical computer science at Inria, where I was advised by Aapo Hyvärinen and Alexandre Gramfort. Before that, I received a Master's in engineering from ENSTA Paris and a Master's in Applied Maths, Vision and Learning (MVA) from ENS Paris-Saclay.

Email / GitHub / Google Scholar / LinkedIn


Research

My research is in machine learning, particularly efficient algorithms for estimating and sampling from (energy-based) statistical models, and learning representations of brain activity.

Sampling from Energy-Based Statistical Models


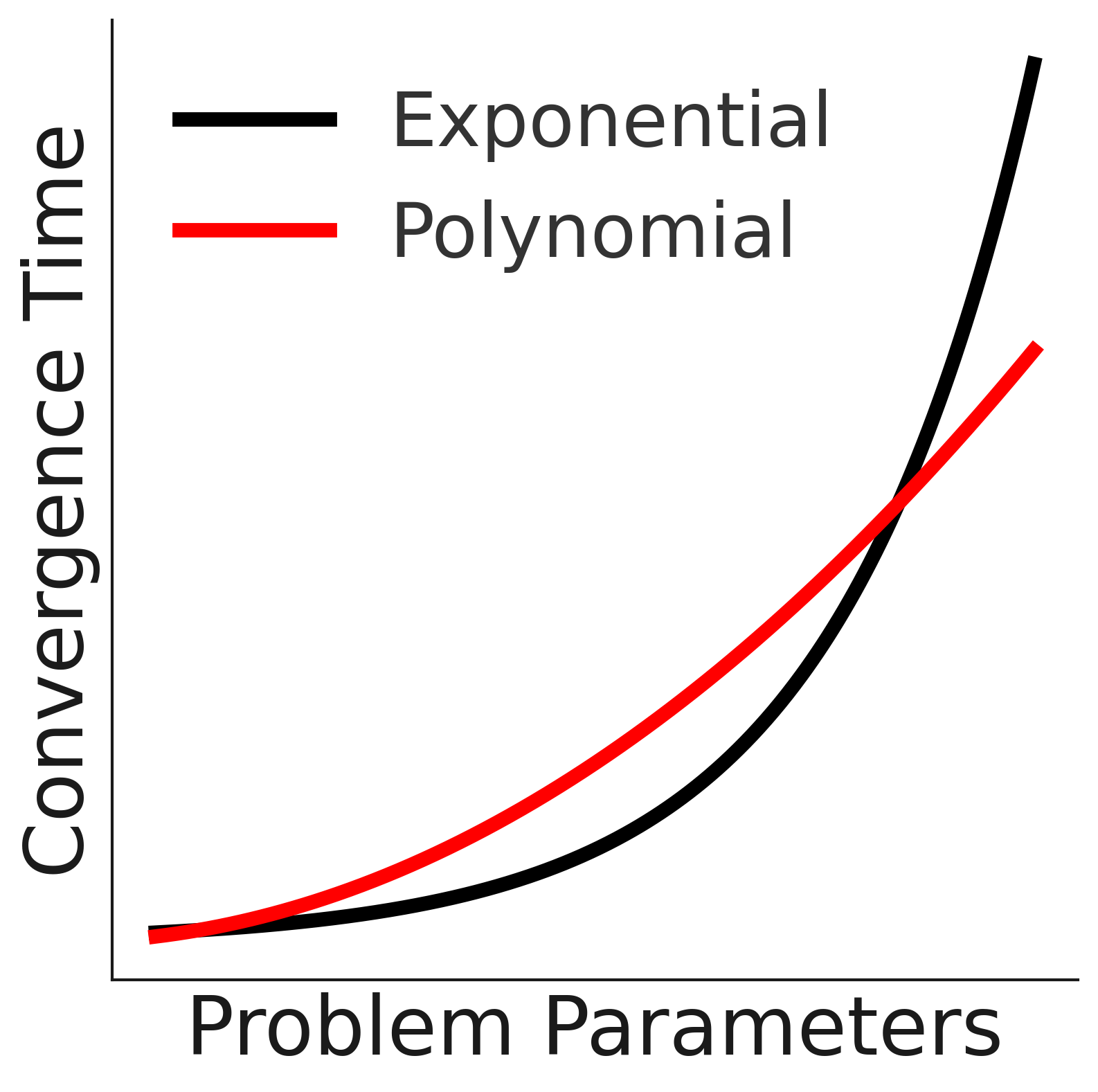

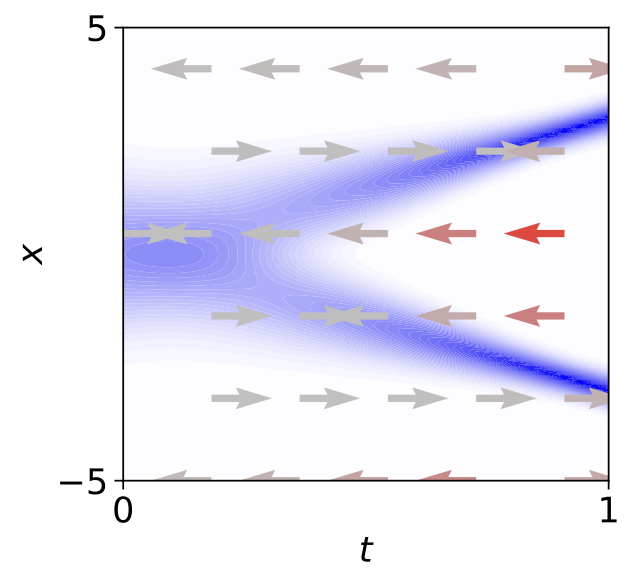

Sampling from multi-modal distributions with polynomial query complexity in fixed dimension via reverse diffusion
Adrien Vacher, Omar Chehab, Anna Korba
Conference on Neural Information Processing Systems (NeurIPS), 2025
arxiv / poster
Time-reversed diffusions are state-of-the-art for sampling from multi-modal distributions, but they rely on score estimates. We analyze how estimation errors affect the final samples.


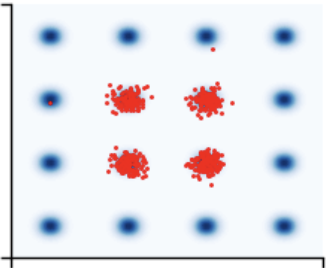

Provable Convergence and Limitations of Geometric Tempering for Langevin Dynamics
Omar Chehab, Anna Korba, Austin Stromme, Adrien Vacher
International Conference on Learning Representations (ICLR), 2025
arxiv / poster
Annealed MCMC tries to approximate a prescribed path of distributions. We show that the popular path given by the geometric mean between a Gaussian and the target has unfavorable geometry. Presented at the Yale workshop on sampling.


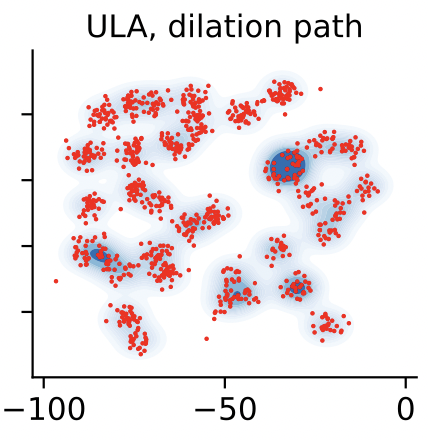

A Practical Diffusion Path for Sampling
Omar Chehab, Anna Korba
Workshop on Structured Probabilistic Inference & Generative Modeling, International Conference on Machine Learning (ICML), 2024
arxiv / poster
Time-reversed diffusions are state-of-the-art in sampling but rely on score estimates. We aim to reduce the variance of these estimates.

Estimating Energy-Based Statistical Models


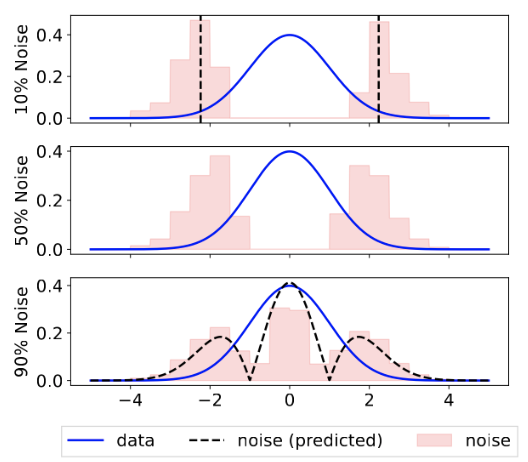

Conditional Noise-Contrastive Estimation of Energy-Based Models by Jumping Between Modes
Hanlin Yu, Michael U. Gutmann, Arto Klami, Omar Chehab
Workshop on Principles of Generative Modeling, EurIPS, 2025
arxiv
We explore design choices for Conditional Noise-Contrastive Estimation (CNCE), a method for learning energy-based models.


Density Ratio Estimation with Conditional Probability Paths
Hanlin Yu, Arto Klami, Aapo Hyvärinen, Anna Korba, Omar Chehab
International Conference on Machine Learning (ICML), 2025
arxiv / code / poster
A density ratio can be obtained by integrating the time score of a probability path. We present an efficient way to estimate the time score.
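The identity behind this entry, log p_1(x) - log p_0(x) = ∫_0^1 ∂_t log p_t(x) dt, can be checked on a toy case. The sketch below is not the paper's code: it assumes a hypothetical 1-D Gaussian path p_t = N(tμ, 1) for t in [0, 1], whose time score (x - tμ)μ is known in closed form, whereas in practice the time score is the quantity that has to be estimated. The function names are illustrative only.

```python
def time_score(x: float, t: float, mu: float) -> float:
    # Hypothetical toy path p_t = N(t*mu, 1): d/dt log p_t(x) = (x - t*mu) * mu
    return (x - t * mu) * mu


def log_ratio_by_time_score(x: float, mu: float, n_steps: int = 1000) -> float:
    # Trapezoidal rule for the integral of the time score over t in [0, 1]
    h = 1.0 / n_steps
    total = 0.5 * (time_score(x, 0.0, mu) + time_score(x, 1.0, mu))
    for k in range(1, n_steps):
        total += time_score(x, k * h, mu)
    return h * total


def log_ratio_exact(x: float, mu: float) -> float:
    # log N(x; mu, 1) - log N(x; 0, 1); the normalizing constants cancel
    return -0.5 * (x - mu) ** 2 + 0.5 * x ** 2


print(log_ratio_by_time_score(0.7, 2.0), log_ratio_exact(0.7, 2.0))  # both ≈ -0.6
```

Because this toy time score is linear in t, the trapezoidal rule is exact up to rounding; with a learned time score, the integrated log-ratio also inherits the error of that estimate.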


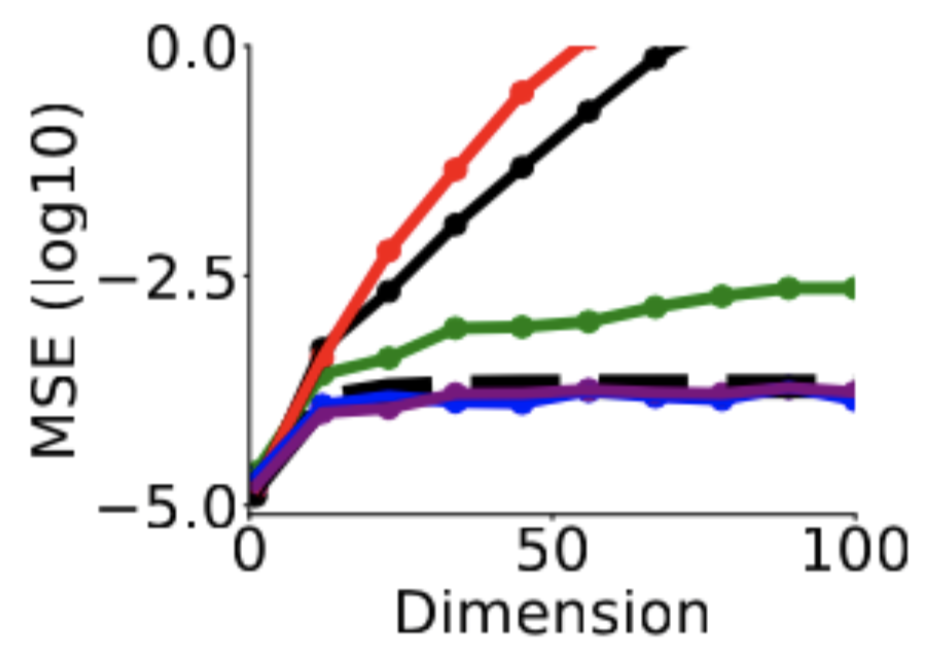

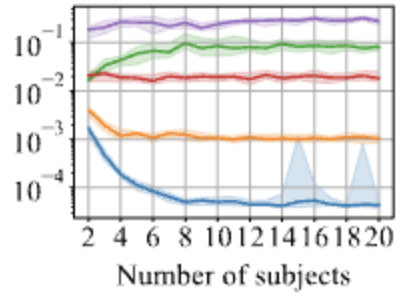

Provable benefits of annealing for estimating normalizing constants: Importance Sampling, Noise-Contrastive Estimation, and beyond
Omar Chehab, Aapo Hyvärinen, Andrej Risteski
Spotlight, Conference on Neural Information Processing Systems (NeurIPS), 2023
arxiv / code / poster
Annealed Importance Sampling uses a prescribed path of distributions to compute an estimate of a normalizing constant. We quantify how the choice of path impacts the estimation error.
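As a rough illustration of Annealed Importance Sampling (not the code released with the paper), here is a minimal stdlib-Python sketch on an assumed 1-D toy problem: a standard Gaussian proposal, an unnormalized Gaussian target centred at 3 whose true normalizing constant is √(2π), the geometric path between them with evenly spaced temperatures, and random-walk Metropolis moves at each temperature. All function names and parameter values are hypothetical choices.

```python
import math
import random

LOG_2PI = math.log(2 * math.pi)


def log_q(x: float) -> float:
    # Normalized proposal: standard Gaussian N(0, 1)
    return -0.5 * x * x - 0.5 * LOG_2PI


def log_f(x: float) -> float:
    # Hypothetical unnormalized target exp(-(x - 3)^2 / 2); its normalizer is sqrt(2*pi)
    return -0.5 * (x - 3.0) ** 2


def log_path(x: float, beta: float) -> float:
    # Geometric path q^(1 - beta) * f^beta (unnormalized)
    return (1.0 - beta) * log_q(x) + beta * log_f(x)


def ais_log_z(n_particles=1000, n_temps=50, n_mh=5, step=1.0, seed=0):
    rng = random.Random(seed)
    betas = [k / n_temps for k in range(n_temps + 1)]  # evenly spaced schedule
    log_w = []
    for _ in range(n_particles):
        x = rng.gauss(0.0, 1.0)  # exact sample from q (beta = 0)
        lw = 0.0
        for k in range(1, n_temps + 1):
            # Weight update: log pi_k(x) - log pi_{k-1}(x) at the current state
            lw += (betas[k] - betas[k - 1]) * (log_f(x) - log_q(x))
            # Random-walk Metropolis moves that leave pi_k invariant
            for _ in range(n_mh):
                y = x + step * rng.gauss(0.0, 1.0)
                log_acc = log_path(y, betas[k]) - log_path(x, betas[k])
                if rng.random() < math.exp(min(0.0, log_acc)):
                    x = y
        log_w.append(lw)
    # q is normalized, so the mean weight estimates the target's normalizer;
    # average in log space (log-sum-exp) for numerical stability
    m = max(log_w)
    return m + math.log(sum(math.exp(w - m) for w in log_w) / n_particles)


z_hat = math.exp(ais_log_z())
print(z_hat, math.sqrt(2 * math.pi))
```

The evenly spaced geometric schedule is only one possible path; changing `betas` or the intermediate distributions changes the variance of the estimate, which is the kind of effect the paper quantifies.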


The Optimal Noise in Noise-Contrastive Learning Is Not What You Think
Omar Chehab, Alexandre Gramfort, Aapo Hyvärinen
Conference on Uncertainty in Artificial Intelligence (UAI), 2022
arxiv / code / poster
NCE estimates the data density by minimizing a binary classification loss between data and noise samples. We find the optimal noise distribution that minimizes the estimation error.
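The classification view of NCE in this entry can be sketched in a few lines. This is a minimal, hypothetical example rather than the paper's code: it assumes a 1-D unnormalized Gaussian model log p(x) = -(x - μ)²/2 + c with a learned log-normalizer c, a fixed noise distribution N(0, 2²) (an arbitrary choice, not the optimal noise derived in the paper), equal numbers of data and noise samples, and plain gradient descent on the logistic loss.

```python
import math
import random

LOG_2PI = math.log(2 * math.pi)
NOISE_STD = 2.0  # hypothetical noise distribution N(0, NOISE_STD^2)


def log_noise(y: float) -> float:
    # Log-density of the Gaussian noise distribution
    return -0.5 * (y / NOISE_STD) ** 2 - math.log(NOISE_STD) - 0.5 * LOG_2PI


def sigmoid(z: float) -> float:
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def nce_fit(data, noise, lr=0.2, n_iters=500):
    """Fit (mu, c) of log p(x) = -(x - mu)^2 / 2 + c by NCE.

    The classifier logit is G(x) = log p(x) - log p_noise(x); with equal sample
    sizes, NCE minimizes -mean(log sigmoid(G(data))) - mean(log(1 - sigmoid(G(noise)))).
    """
    mu, c = 0.0, 0.0
    ln_data = [log_noise(x) for x in data]
    ln_noise = [log_noise(y) for y in noise]
    for _ in range(n_iters):
        g_mu = g_c = 0.0
        for x, ln in zip(data, ln_data):
            # Gradient of -log sigmoid(G) is -(1 - sigmoid(G)) * dG, with dG = (x - mu, 1)
            r = 1.0 - sigmoid(-0.5 * (x - mu) ** 2 + c - ln)
            g_mu -= r * (x - mu) / len(data)
            g_c -= r / len(data)
        for y, ln in zip(noise, ln_noise):
            # Gradient of -log(1 - sigmoid(G)) is sigmoid(G) * dG
            s = sigmoid(-0.5 * (y - mu) ** 2 + c - ln)
            g_mu += s * (y - mu) / len(noise)
            g_c += s / len(noise)
        mu -= lr * g_mu
        c -= lr * g_c
    return mu, c


rng = random.Random(0)
data = [rng.gauss(1.0, 1.0) for _ in range(1000)]  # toy data drawn from N(1, 1)
noise = [rng.gauss(0.0, NOISE_STD) for _ in range(1000)]
mu_hat, c_hat = nce_fit(data, noise)
print(mu_hat, c_hat)
```

If the fit succeeds, μ should approach the data mean (about 1) and c should approach -½ log(2π) ≈ -0.919, the log-normalizer of a unit-variance Gaussian. Swapping in a different `NOISE_STD` changes how accurate these estimates are for a given sample size.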

Learning Representations of Brain Activity |


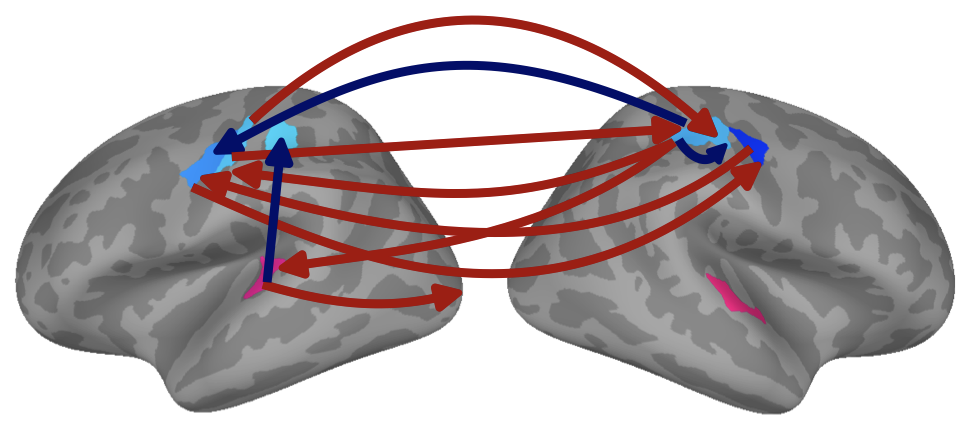

Multi-View Causal Discovery without Non-Gaussianity: Identifiability and Algorithms
Ambroise Heurtebise, Omar Chehab, Pierre Ablin, Alexandre Gramfort, Aapo Hyvärinen
Oral, Workshop on Causality for Impact - Practical challenges for real-world applications of causal methods, EurIPS, 2025
arxiv
We learn causal relationships, represented as a directed acyclic graph (DAG), between random variables collected from different environments.


MVICAD2: Multi-View Independent Component Analysis with Delays and Dilations
Ambroise Heurtebise, Omar Chehab, Pierre Ablin, Alexandre Gramfort
IEEE Transactions on Biomedical Engineering, 2025
arxiv / code
Independent Component Analysis (ICA) is a popular algorithm for learning a representation of data. We propose a version for data collected in different contexts, whose representations differ only by temporal delays or dilations.


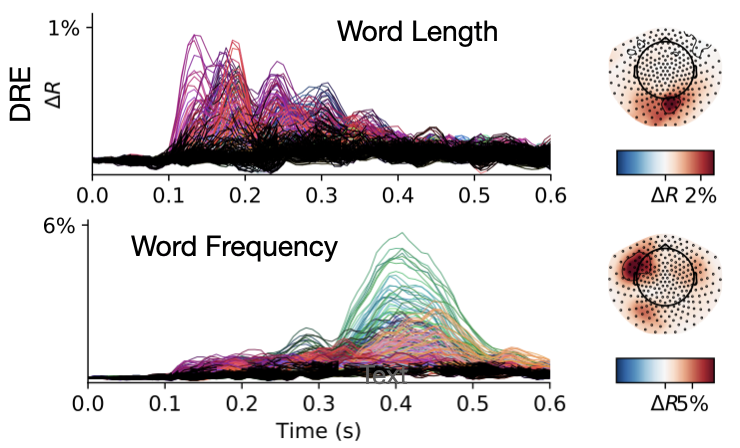

Deep Recurrent Encoder: an end-to-end network to model magnetoencephalography at scale
Omar Chehab*, Alexandre Defossez*, Jean-Christophe Loiseau, Alexandre Gramfort, Jean-Remi King
Journal of Neurons, Behavior, Data Analysis, and Theory, 2022
arxiv / code
We compare different models for predicting the brain's response to external stimuli. Our model, based on a deep neural network, is more accurate and interpretable.


Learning with self-supervision on EEG data
Alexandre Gramfort, Hubert Banville, Omar Chehab, Aapo Hyvärinen, Denis Engemann
IEEE workshop on Brain-Computer Interface, 2021
arxiv
We learn rich representations of EEG brain activity using a self-supervised loss.


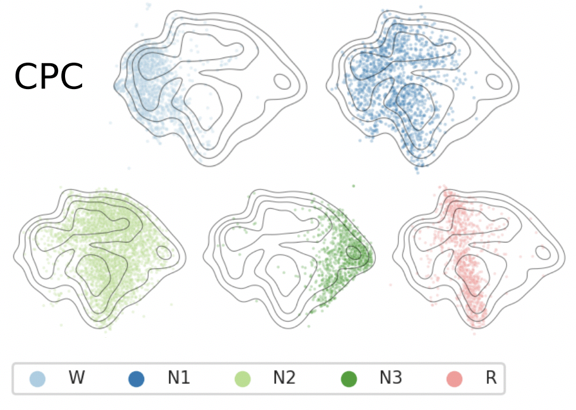

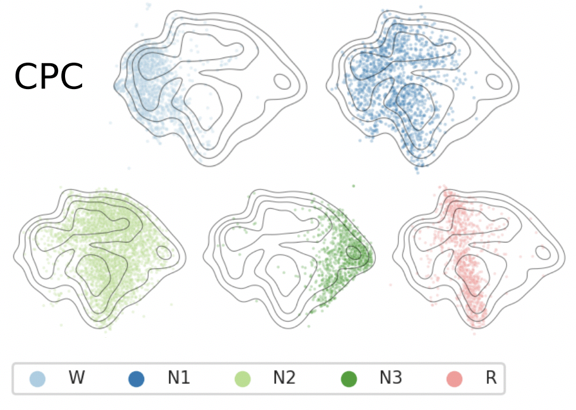

Uncovering the structure of clinical EEG signals with self-supervised learning
Hubert Banville, Omar Chehab, Aapo Hyvärinen, Denis Engemann, Alexandre Gramfort
Journal of Neural Engineering, 2021
arxiv
We learn rich representations of EEG brain activity using a self-supervised loss.


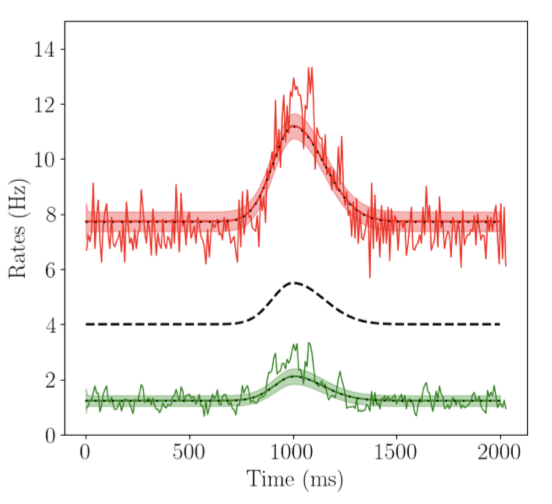

A mean-field approach to the dynamics of networks of complex neurons, from nonlinear Integrate-and-Fire to Hodgkin–Huxley models
Mallory Carlu, Omar Chehab, Leonardo Dalla Porta, Damien Depannemaecker, Charlotte Héricé, Maciej Jedynak, Elif Köksal Ersöz, Paulo Muratore, Selma Souihe, Cristiano Capone, Yann Zerlaut, Alain Destexhe, Matteo di Volo
Journal of Neurophysiology, 2020
arxiv
Our theory predicts the average behavior of neuronal populations that fire asynchronously.

Talks

* 03/2026, Workshop on Gradient Flows for Uncertainty Quantification, SIAM Conference, USA


Teaching

I was a Teaching Assistant for the following Master's courses.


Service

I review submissions to the following machine learning conferences: NeurIPS, ICML, ICLR and AISTATS. I occasionally review submissions to the following journals: the Journal of Machine Learning Research (JMLR), the Annals of the Institute of Statistical Mathematics (AISM), and Mathematical Methods of Statistics. I am grateful to have been recognized as a "top reviewer" (AISTATS 2022; NeurIPS 2022, 2023 and 2024).


Design and source code from Jon Barron's website